When Business Decisions Trump Technical Performance Scores

Posted on Feb, 2015 by Admin

The following article is a guest post by Scott Moore.

Performance is important to me – I think most of you know that about me, and to call it important would be an understatement. I am also one of those people who are never satisfied with just doing things half way. I feel that way about the work that I do for myself and the partners I work with.

In an effort to ensure that we follow the same advice that we give to clients, I decided to go through an exercise with the webmaster of one of our partners to look into the performance of the end user experience on their web site.

Before our partner implements major changes to their site, they always execute formal functional testing and performance testing activities. However, I wanted to see performance from an end user perspective, as measured by a third party, to see if they are doing all they can to optimize the site. I decided to look at GTMetrix and YSlow scores and take a look at their recommendations.

The first score with GTMetrix was 79% (a grade of C), and the YSlow score was 73%. The performance engineer in me initially shrieked because I expect nothing but 100% on performance, but the business side of me needs to keep in mind that it isn’t always about having the highest score. There’s no direct competition to see who has the highest score from a single page request test.

What’s really important is what the end user experience is. Of course we want it to be fast, but there needs to be context. Their web analytics tell me that the visitors are pretty happy with the content, but I wanted to know more. So I continued with this exercise to see what could be discovered.

GTMetrix and YSlow Recommendations

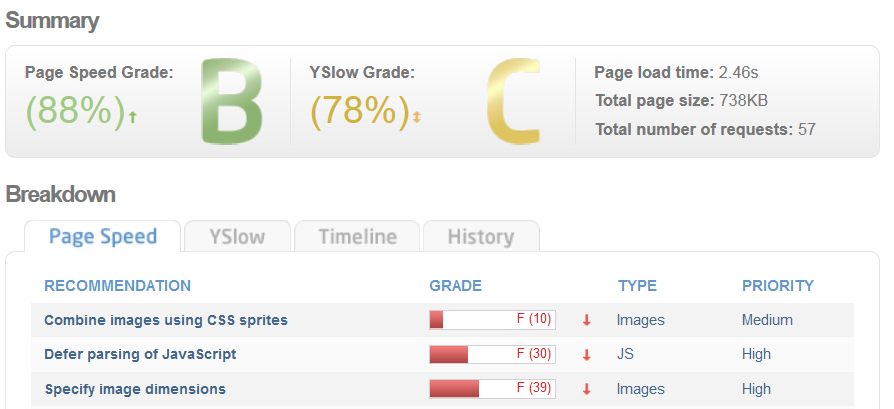

We did find a piece of low hanging fruit. The browser caching of static assets was set at 6 hours. It was supposed to be set at 1 week. Thank you GTMetrix! This raised the GTMetrix score to 88% (a respectable B) and the YSlow score went up to 79%.
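To make the size of that change concrete, here is a minimal sketch of the two cache lifetimes expressed as `Cache-Control` header values. The article does not say how the site sets its caching policy, so the helper name `cache_control` and the `public` directive are assumptions for illustration only.

```python
from datetime import timedelta


def cache_control(lifetime: timedelta) -> str:
    """Build a Cache-Control value for a static asset.

    Assumption: the asset may be stored by shared caches ("public").
    The real site's directives are not given in the article.
    """
    return f"public, max-age={int(lifetime.total_seconds())}"


# The misconfigured value (6 hours) and the intended value (1 week).
old_header = cache_control(timedelta(hours=6))
new_header = cache_control(timedelta(weeks=1))

print(old_header)
print(new_header)
```

The intended lifetime is 28 times longer than the misconfigured one, which is why a single setting moved both scores.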

As the webmaster delved into each recommendation, he had to determine if the improvement in performance was worth the time, money, and effort to implement it just to get a better score. As we continued to dive into the details, the performance engineer in me was surprised by decisions made as a result of this effort.

First, consider that the GTMetrix and YSlow grading systems aren’t targeted towards business objectives or the typical end user experience. They are good guidelines to follow, but the scoring is a little harsh when you consider the benefits. A business has to make a decision regarding what is best in terms of cost and the ROI of the underlying changes that the guidelines suggest.

User experience (UX) has to be taken into account. In fact, UX is KING! If a website page loads from the user’s perspective in under 1.5 seconds, but the GTMetrix score is a “C” – is that a bad score? The webmaster would argue it isn’t.

If changes are made to the site that raise the score to an A, there may be other unintentional side-effects that are negative from a UX or business perspective. It might cost money and time with no increase in revenue. The partner’s website is more than a brochure-style website. It is dynamic in nature, both due to content and also due to consistent improvements, changes and additions to the design and features.

Here is the real kicker – regardless of the score, they still have a better page load time and UX than other companies in the same space – AND, they do it with SSL enabled! Not only does the DOM load faster, but all of the assets load faster and are more optimized than other similar web sites.

The business man in me is listening to the webmaster asking “Why should we waste time and resources improving what we already do better than others? Because of a score?” OK, I get it.

To humor me, the webmaster broke it down based on each recommendation provided by GTMetrix and why they should be ignored:

Combine images using CSS sprites

- Sprites started gaining popularity in 2003, when internet speed was more of an issue than today.

- Browsers used to be limited to two concurrent HTTP connections per host. Since IE 8, this is no longer true; six or more are now standard. (HTTP/2 will change this in the coming years.)

- Creating a sprite means that both the sprite and the CSS have to be modified every time we want to add an image that is a candidate for sprites. This increases coding time.

- Not every image is a candidate for a Sprite. A human is a better judge of this than a report score.

- The site, overall, may become less accessible.

Summary: There is no added perceivable benefit to the UX, and it makes the web developers’ life more of a headache in this scenario. Overall, the ROI here is negative.

Defer parsing of JavaScript

Where possible, this is already done. If not, it is for the following reasons:

- JavaScript parsing during page load is not always a bad thing. In this case, only two of the assets should load before the page.

- Some of the assets are not deferred because of the underlying platform our site is built on, or a related plugin implementation. Modifying the core platform or a related plugin adds overhead for every subsequent update.

- Many of these assets are trivial. With today’s computing power, assets that are less than 15 kiB are not going to degrade the UX. The report is quite harsh in this regard.

Summary: Again, negative ROI.

Specify image dimensions

Fair enough. We have 3 out of 53 images that should have dimensions in the HTML. So 6% of the assets equates to an “F” (39%). Really?

Summary: No perceivable benefit to the UX – negative ROI.
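The mismatch between the share of affected images and the grade is easy to check. This is only the arithmetic from the figures quoted above; how GTMetrix actually derives the 39% is not described in the article and is not modeled here.

```python
# Figures quoted in the article.
images_missing_dimensions = 3
total_images = 53
reported_score_percent = 39  # the "F" shown in the report

# Share of images that lack width/height in the HTML.
share_percent = images_missing_dimensions / total_images * 100

print(f"{share_percent:.1f}% of images affected, "
      f"reported score {reported_score_percent}%")
```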

Optimize the order of styles and scripts

Some of this is controlled by the underlying technology or related plugins. Modifying these adds overhead for every subsequent update of the applicable software.

Summary: No perceivable benefit to the UX – negative ROI.

Remove query strings from static resources

This is a nice recommendation, but really doesn’t provide much weight. In the case of this site, the query strings are required to control the design and are manually generated.

GTMetrix states, “Resources with a ‘?’ in the URL are not cached by some proxy caching servers.” This is correct, but in this case a human is smarter than the report. We should not care if Squid pre-v3.0 doesn’t cache the assets, because the cache server and load balancer do.
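For readers who want to see which of their own assets this rule would flag, the following is a rough sketch of the check. The URLs are hypothetical placeholders, not the partner site's assets, and the function is an illustration of the idea rather than GTMetrix's actual logic.

```python
from urllib.parse import urlsplit


def has_query_string(url: str) -> bool:
    """Return True when the URL carries a non-empty query component."""
    return bool(urlsplit(url).query)


# Hypothetical asset URLs, for illustration only.
asset_urls = [
    "https://www.example.com/css/site.css?ver=2.1",
    "https://www.example.com/js/app.js",
    "https://www.example.com/img/logo.png?ver=7",
]

flagged = [url for url in asset_urls if has_query_string(url)]

for url in flagged:
    print("query string present:", url)
```

Whether a flagged asset matters still depends on what sits between the server and the visitor, which is the judgment call described above.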

Optimize images

As a general practice, this is already done. There is a potential to reduce the size by 27.4 KiB, according to this report. The time it will take to implement the change across 19 assets isn’t significant. This might be a good thing to do when the team is sitting around with nothing to do. Until then, most of the target audience can handle this minor overhead.

The Rest

The only perceived benefit is getting a better score on the report.

The Business Wins

The performance engineer in me still wants to have the fastest site in the world. The business man in me listens to wise counsel and understands there actually is a line in the sand called “good enough”. There is a fine line in performance engineering, and in life in general, where commitment to excellence turns into an unhealthy drive to perfectionism.

Sometimes businesses make decisions IT doesn’t agree with, and in the bigger picture it may be the right thing to do. While I have great respect for GTMetrix, YSlow and all the other vendors who are out there trying to make the web faster, sometimes there are good reasons for not implementing performance improvements.

As always, thoughts, feedback, and pithy comments are welcome.