Performance Center of Excellence: Encapsulation or Enablement?

Posted on Mar, 2004 by Admin

Note: This article was originally posted on Loadtester.com, and has been migrated to the Northway web site to maintain the content online.

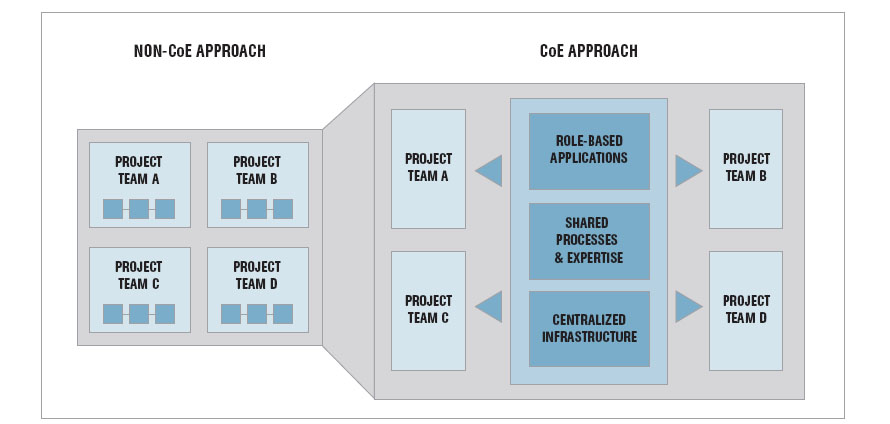

Those of you following my columns know that I have laid out in some detail how to begin building a Center of Excellence (COE) around performance testing and centralizing it. Now I want to expound on three models that performance testing groups generally follow. Encapsulation refers to a silo approach. Enablement means allowing resources from other groups to self-govern their own performance testing. Enablement does not mean totally distributed, but a balance between distributed and centralized. Use the examples in each model to help you determine where your organization stands and how to reach your end goal of creating a solid Performance COE.

Encapsulated Model – Silos

This can be the result of large companies with immature processes and methodologies. Sometimes there are so many organizations in a company that many departments are working on the same thing from a different angle. The right hand does not know what the left hand is doing. From a performance perspective, you might have three groups, each with its own testing team, using the same or different testing tools. Most likely they each have their own way of doing things, and nothing is consistent across the teams. One group uses Rational RUP, another Mercury Interactive with their best practices, and still another uses only open source tools and ad hoc reports. In this situation, chaos is avoided by accident, not by design. There is no “center” of excellence here. Each team knows how its specific application performs, but may not have considered other kinds of testing approaches. This is a problem that needs to be solved.

This is also the model when there is a need for only one lab with a single point of contact for performance testing. In general, the more focused the group is, the better the encapsulated model works. For small shops, perhaps a single lab is all that will ever be necessary, and the encapsulated model will work very well in this case. This is the fastest way to get ramped up for any company that has not been doing any performance testing in the past. As the organization grows, the decision to go to multiple labs would depend on the return on the investment. But it is important to avoid the chaos I have described above, where everyone does it differently. That is a lot of duplicated work for no reason.

Hopefully, those of you who see this situation (many silos within one company) will read this and be inspired to get together with all of the other teams and sort out what everyone else is doing. Find out how much maintenance you are paying on your licenses and just how much downtime each lab has over a period of time. Compare deliverables (final reports, test results, etc.) and see how each team does them. Find out if there are best practices from all groups that can be combined into one solid way of doing things. Are there standard requirements for load test results (e.g. no more than X% on the CPU or transaction times no higher than X)? Are there ways to combine infrastructure? Once these types of things are out on the table and discussed, it may lead to consolidated efforts to better service the projects. What would the next step look like? Glad you asked…

Encapsulated Model – Consolidated

An attempt is made to consolidate resources. It is still a silo lab and performance group, but it serves all projects across the organization. After the discussions mentioned before, hopefully the light comes on, a single performance lab is built, and resources are isolated around it. Just as before with single groups, all projects go into the lab when they are ready to be tested, and they come out either passing or failing the requirements. The difference is that now you have a core group of people who have agreed to use a standard set of tools, methods, and deliverables across projects. This reduces the internal cost of setting up and running performance tests because each time the methodology is followed it gets better and faster. Lessons learned help create best practices. Project managers begin to understand how the system works and will return to the lab on the next project they work on. This is how standardizing and centralizing resources allows you to move towards a Center of Excellence.
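To make the "passing or failing the requirements" idea concrete, here is a minimal sketch of one shared pass/fail check that every project could run its results through. The names (`Requirements`, `LoadTestResult`, `evaluate`) and the limits in the example are hypothetical placeholders of mine, not part of any particular tool, and your own thresholds would come out of the discussions between teams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Shared limits agreed on by the teams (values are supplied by the caller)."""
    max_cpu_percent: float
    max_transaction_seconds: float


@dataclass(frozen=True)
class LoadTestResult:
    """Summary numbers taken from one load test run (hypothetical fields)."""
    project: str
    peak_cpu_percent: float
    slowest_transaction_seconds: float


def evaluate(result: LoadTestResult, limits: Requirements) -> list[str]:
    """Return every violated requirement; an empty list means the run passed."""
    failures = []
    if result.peak_cpu_percent > limits.max_cpu_percent:
        failures.append(
            f"{result.project}: CPU peaked at {result.peak_cpu_percent}% "
            f"(limit {limits.max_cpu_percent}%)"
        )
    if result.slowest_transaction_seconds > limits.max_transaction_seconds:
        failures.append(
            f"{result.project}: slowest transaction took "
            f"{result.slowest_transaction_seconds}s "
            f"(limit {limits.max_transaction_seconds}s)"
        )
    return failures


if __name__ == "__main__":
    # Placeholder limits for illustration only, not recommended values.
    limits = Requirements(max_cpu_percent=80.0, max_transaction_seconds=3.0)
    run = LoadTestResult("order-entry", peak_cpu_percent=91.0,
                         slowest_transaction_seconds=2.4)
    problems = evaluate(run, limits)
    print("PASS" if not problems else "FAIL")
    for line in problems:
        print(" -", line)
```

The point is not the code itself but that every project gets judged by the same rules, so a "pass" from the lab means the same thing no matter which team asked for the test.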

The lab is not usually active 24/7 because there is a need to create scripts, fill out documentation, and hold meetings to organize future tests. This means you could have more than one performance engineer with only one performance lab. One person may do all the prep work Mon-Wed and run tests on Thu-Fri. The other preps Wed-Fri and runs tests on Mon-Tues. For the performance staff, it is a must to have a mechanism for scheduling tests. Whether this is an online calendar or just an e-mail, scheduling becomes more important the more labs you have. Keeping track of what tests are running on what hardware can get pretty hairy when you have database clusters that host multiple databases from multiple apps. How about web servers hosting multiple sites on the QA servers? What about VMWare machines with multiple environments used in testing? The last thing you want is two people load testing applications on shared boxes and skewing the results. Coordination is key.
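Whatever scheduling mechanism you pick, the core check is the same: two tests collide when they overlap in time and touch at least one of the same machines. Below is a minimal sketch of that check. The `Booking` record, the host names, and the function names are hypothetical, and a real calendar or test management tool would store more than this.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Booking:
    """One scheduled test window (hypothetical record layout)."""
    engineer: str
    hosts: frozenset   # names of the servers, clusters, or VMs the test loads
    start: datetime
    end: datetime


def conflicts(a: Booking, b: Booking) -> bool:
    """True when two bookings overlap in time and share at least one host."""
    shares_hardware = bool(a.hosts & b.hosts)
    # Back-to-back windows (one ends exactly when the other starts) do not overlap.
    overlaps_in_time = a.start < b.end and b.start < a.end
    return shares_hardware and overlaps_in_time


def find_conflicts(bookings: list) -> list:
    """Return every pair of bookings that would skew each other's results."""
    return [
        (a, b)
        for i, a in enumerate(bookings)
        for b in bookings[i + 1:]
        if conflicts(a, b)
    ]


if __name__ == "__main__":
    # Made-up engineers, hosts, and times for illustration.
    first = Booking("engineer-1", frozenset({"qa-db-cluster", "qa-web-1"}),
                    datetime(2004, 3, 4, 9), datetime(2004, 3, 4, 12))
    second = Booking("engineer-2", frozenset({"qa-db-cluster"}),
                     datetime(2004, 3, 4, 11), datetime(2004, 3, 4, 14))
    for a, b in find_conflicts([first, second]):
        print(f"{a.engineer} and {b.engineer} collide on {sorted(a.hosts & b.hosts)}")
```

Notice that the check is keyed on hardware, not on applications. Two unrelated apps that happen to share a database cluster or a VMWare host still count as a conflict, which is exactly the case that is easy to miss with an e-mail thread.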

Many times developers and project managers don’t know they need performance testing until they actually do it once. When major things are found (like improving performance over 300% with a few tweaks), everyone looks better because they were found before release to production, saving time and money. The lab becomes akin to the local Starbucks. Once the client gets a taste of it, it won’t be long before they’re back. As the performance lab becomes more of a draw to clients, rather than something pushed down their throats, the workload will increase, and it will come to the point where developers are asking the engineer to get involved with short test runs and “what if” scenarios for every little thing. I like to call these “science experiments”. At first this is great because it means people are recognizing the value of performance testing. On the other hand, as the number of applications one engineer supports increases, it becomes harder to schedule time for the science projects, and frustration begins. The usual fix for this is a bigger budget for an additional lab and other resources. If two labs are available, you can do twice as many projects and have more time to handle the science projects as well, right? Unfortunately, it doesn’t work this way, but it sounds good at the time.

At this point, let’s assume the performance team has progressed exponentially. They’ve doubled the department headcount and added an additional testing lab and the work keeps coming in. Before long, management can’t feel good about the risk of any project without having a load test beforehand. You hear the suggestion for another lab and more resources to handle additional projects slated for next year. More budget money is not always going to be the right answer for more capacity. Another approach needs to be considered to increase efficiency, and this is where Enablement comes into the picture.

Enablement Model

The enablement model would normally only apply to very large companies where adding 10 new projects (or more) per year is common. Let’s continue on with our current team. What happens when there is talk of needing a third lab and third resource? Should the team continue to clone labs and people? In large shops, there will always be too much testing to do. At some point, the performance team has to determine how to get all of the work done without adding more labs. They will need to look at how much each lab costs individually, how much software and maintenance cost yearly, and how many new and existing projects are being supported each year. What is the ROI from each lab individually? Is the development team mature enough and organized enough to handle this model? Is there commitment from all teams and management? Is the performance team mature enough to handle this? Stated another way: does the team have a way to maintain organized processes? Can they keep control over tests so that this does not turn into a huge chaotic mess, putting you back at square one? What would happen if everyone started performance testing? How big would the performance team have to be? How much hardware would they require? How much time will it take to understand the science projects where only a few developers know why they want to test it in the first place? These are the kinds of questions the performance team should consider before moving toward the Enablement model.

If you have decided that the time has come for Enablement, specific team members on the development or business analyst side need to be selected and given the ability to run their own science experiments and projects, while the performance team works on core projects that have high risks or high profiles. If there are multiple development teams, one person from each should be selected as the contact point for performance. That person would be responsible for all of the science projects and component testing for their projects, while the performance team would enable them by providing standardized results, requirements, scheduling, etc. The resource of choice would probably be a senior developer who has a vital interest in making sure the testing gets done (like they’ll be fired if they don’t). The testing could also be done by a Business Process Manager or another member of the team. The performance team will probably help in setting up the scripts and test runs. They will train the person executing the tests how to run them multiple times, saving off the results for later analysis. Testing in the view of this resource is simply a “stop and replay” activity because the performance team hides the complexity of the setup from them. Hey, I never said the performance team shouldn’t have to do any work!

The downside to the Enablement model is the potential for chaos. As with two labs, mechanisms for control must be in place to know who is testing what systems and when. Performance testing tools will need to be moved to a web interface and have built-in management capabilities. A repository for reports with standardized deliverables will help bring order to the output of all the testing going on. In other words, some of the organization must be built into the performance testing tool itself. I believe organizational maturity with solid, documented processes is the biggest consideration for moving to the Enablement model. To be most effective, following the appropriate processes should be tied directly to each employee’s yearly evaluation and affect salary increases. This keeps everybody honest and away from the backdoor handshakes that often occur in order to meet strategic dates. Time to market is important, but not at the risk of product failure.

Summary

With Encapsulation, there is the advantage of having a trained knowledge group with intellectual capital and capabilities that reduce the per-unit cost of testing. However, other efficiencies are gained when performance considerations are brought in earlier in the development lifecycle. Rather than waiting to get lab time, giving development exposure to the tools and methods enables performance to be engineered into the product from the beginning rather than just making it a phase in the lifecycle. At that point, integrated performance testing is never much of a surprise, and is most likely a simple checkpoint. Management is able to change its expectations of a product’s scalability based on early testing at the development stage. The Encapsulated model can help you produce results the fastest, but the only way to continue growing as a Center of Excellence is either a big budget or moving to the Enablement model.