Performance Testing 101: LoadRunner Scenario Design Approach

Posted on Jun, 2013 by Admin

This is the sixth installment in a multi-part series on the basics of performance testing applications. It is written for beginners, but experienced engineers can also share it with project team members to educate them on the basics. Project Managers, Server Team members, Business Analysts, and people in other roles can use this information as a guide to the performance testing process, its typical considerations, and how to get started if they have never embarked on such a journey.

One key area of scenario design is the distinction between users, vusers, and transactions. Users and vusers (virtual users) are not necessarily equal. LoadRunner runs vusers, so real users must be translated into virtual users. Vusers are typically more aggressive than real users, so a smaller number of vusers can do the work of a larger number of real users. For this reason, defining a test by the number of users isn't the best approach; transaction volume should be the keystone instead. One user could easily perform 1,000 transactions in an hour, or 1,000 users could each perform 1 transaction in an hour. Either way, the end result is 1,000 transactions. The goal is to find a happy medium between these two extremes, which is why the transaction rate is the most important characteristic of the load test. It is typically determined at the peak hourly rate, so the scenario should be driven by the total peak number of transactions per hour rather than by the peak number of users.
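To make the users-versus-transactions translation concrete, here is a minimal Python sketch that estimates how many vusers a scenario needs to reach a target peak hourly transaction rate. The function and parameter names are hypothetical, and it assumes every vuser runs the same script at a fixed number of iterations per hour.

```python
import math


def vusers_needed(peak_tx_per_hour: float,
                  tx_per_iteration: int,
                  iterations_per_vuser_per_hour: float) -> int:
    """Estimate the vusers needed to reach a target peak hourly transaction rate.

    Hypothetical helper: assumes every vuser runs the same script at a fixed rate.
    """
    # Transactions a single vuser completes in one hour.
    tx_per_vuser_per_hour = tx_per_iteration * iterations_per_vuser_per_hour
    # Round up so the scenario reaches (rather than falls short of) the target.
    return math.ceil(peak_tx_per_hour / tx_per_vuser_per_hour)


# The two extremes from the text both produce 1,000 transactions per hour.
print(vusers_needed(1000, tx_per_iteration=1, iterations_per_vuser_per_hour=1000))  # 1
print(vusers_needed(1000, tx_per_iteration=1, iterations_per_vuser_per_hour=1))     # 1000
# A middle ground: 5 transactions per iteration, 12 iterations per vuser per hour.
print(vusers_needed(1000, tx_per_iteration=5, iterations_per_vuser_per_hour=12))    # 17
```

Because the result is rounded up, the middle-ground case (17 vusers × 5 transactions × 12 iterations) actually produces 1,020 transactions per hour, slightly above the 1,000 target.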

During scenario execution, the number of virtual users is ramped up at a moderate rate (to avoid an unrealistic startup overload) until the maximum number of vusers is running concurrently. The goal is to hold the maximum user load at a steady state long enough to yield meaningful statistical data.
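A linear ramp-up followed by a steady-state hold can be sketched as a simple schedule. This is an illustration, not LoadRunner's own Controller configuration: the helper and its parameters (batch size, interval between batches, steady-state length) are hypothetical, and it assumes the steady state begins as soon as the last batch of vusers has started.

```python
import math


def ramp_schedule(max_vusers: int, step_size: int, step_interval_s: int,
                  steady_state_s: int) -> tuple[list[tuple[int, int]], tuple[int, int]]:
    """Hypothetical helper returning the ramp-up steps as
    (start_time_s, running_vusers) and the (start_s, end_s) steady-state window."""
    n_steps = math.ceil(max_vusers / step_size)
    # Start `step_size` more vusers every `step_interval_s` seconds, capped at the max.
    ramp = [
        (i * step_interval_s, min((i + 1) * step_size, max_vusers))
        for i in range(n_steps)
    ]
    # Simplifying assumption: steady state begins once the last batch has started.
    steady_start = ramp[-1][0]
    return ramp, (steady_start, steady_start + steady_state_s)


ramp, steady = ramp_schedule(max_vusers=20, step_size=5, step_interval_s=60,
                             steady_state_s=3600)
print(ramp)    # [(0, 5), (60, 10), (120, 15), (180, 20)]
print(steady)  # (180, 3780)
```

Only the steady-state window, not the ramp-up, would normally be used when summarizing results.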

For benchmarking purposes, a consistent scenario is used in which pacing and think times are set to fixed values. This allows two or more executions to be compared. This type of scenario also produces a steady transaction rate, with minimal peaks and troughs that can otherwise be difficult to recreate consistently. When compared with a random, real-world scenario, there is little to no difference.

Pacing is calculated by dividing one hour by the number of iterations per hour. Each script has a think time between consecutive transactions. There is no think time before the first transaction or after the last one, so the total number of think times is the number of transactions minus one. Pacing acts as a buffer between the last transaction and the next one, artificially adding a think time. A think time buffer is also used: it is the estimated time to execute an average transaction, typically five seconds. To calculate the think time, multiply the buffer time by the number of transactions and subtract the result from the pacing value, then divide that value by the number of transactions minus one.
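The pacing and think time arithmetic above can be written as a short Python sketch. The five-second buffer comes from the text; the example script (5 transactions, 12 iterations per hour) and the function names are assumptions for illustration.

```python
SECONDS_PER_HOUR = 3600


def pacing_seconds(iterations_per_hour: float) -> float:
    """Pacing = one hour divided by the number of iterations per hour."""
    return SECONDS_PER_HOUR / iterations_per_hour


def think_time_seconds(pacing_s: float, n_transactions: int,
                       buffer_s: float = 5.0) -> float:
    """Think time between consecutive transactions (hypothetical helper).

    buffer_s is the estimated time to execute an average transaction.
    There are n_transactions - 1 think times per iteration: none before
    the first transaction and none after the last one.
    """
    if n_transactions < 2:
        raise ValueError("need at least two transactions to have a think time")
    remaining = pacing_s - buffer_s * n_transactions
    if remaining < 0:
        raise ValueError("pacing is too short for the estimated transaction times")
    return remaining / (n_transactions - 1)


# Example: a script with 5 transactions that should run 12 iterations per hour.
pacing = pacing_seconds(12)            # 300.0 seconds
think = think_time_seconds(pacing, 5)  # (300 - 5 * 5) / 4 = 68.75 seconds
print(pacing, think)
```

Adding the pieces back up, 5 × 5 seconds of transaction time plus 4 × 68.75 seconds of think time fills the 300-second pacing interval exactly. The guard clauses reject inputs where the estimated transaction time alone would exceed the pacing.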

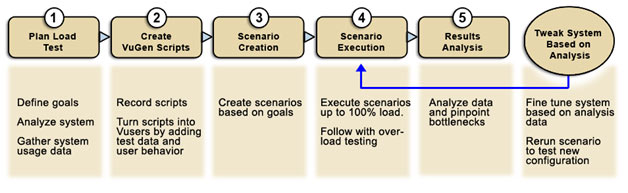

The LoadRunner Performance Testing Process

Below is a graphic that details the LoadRunner Performance Testing Process. This document covers the first step, Plan Load Test. The QRM team will use the information gathered in this document to carry out the remaining steps. Additional information may be needed to complete scripting, or to help investigate any issues that are found. Please note that performance testing is a cyclical process; do not expect the test to be executed only once. Typically, issues are found and retesting is required until the expected performance is met.

Project Timelines

No project is a project without some kind of project plan. No two projects are the same, but luckily performance testing doesn't vary greatly from one project to another. Typically the biggest deciding factor is script creation, although scenario execution always carries some uncertainty, depending on whether issues are found. Here is a general outline of how the Project Plan should be developed.

- Discovery – (1 week) – This step of the project covers gathering information and holding kickoff meetings with the application teams.

- Planning – (1 week) – During this phase the Performance Test Plan is created and the business processes are identified. Data requirements and business process steps are documented.

- Scripting – (2 days/script) – Scripts are recorded, modified, and debugged.

- Scenario Execution – (2 weeks) – The first few days cover debugging runs, followed by baseline tests. This phase also allows some time to identify issues and rerun tests. If multiple types of tests are expected (for example, duration and stress), add approximately two days per execution.

- Analysis and Reporting – (1 week) – Results are compiled and a final report is created.

Based on a typical scenario with 5 business processes, expect 6 weeks to perform the necessary performance testing. Packaged applications with a higher level of complexity, or lower-level protocols that require more debugging time, will need longer planning and scripting time.
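The phase estimates above can be totaled with a small Python sketch. It assumes one script per business process, five business days per week, and phases that run strictly back to back; the constant and function names are hypothetical.

```python
import math

# Phase estimates from the outline above, in business days
# (assumption: 5 business days per week).
DISCOVERY_DAYS = 5
PLANNING_DAYS = 5
SCRIPTING_DAYS_PER_SCRIPT = 2
EXECUTION_DAYS = 10
EXTRA_DAYS_PER_ADDITIONAL_TEST_TYPE = 2
ANALYSIS_DAYS = 5


def estimate_business_days(n_scripts: int, additional_test_types: int = 0) -> int:
    """Sum the phase estimates, assuming no overlap between phases."""
    return (DISCOVERY_DAYS
            + PLANNING_DAYS
            + SCRIPTING_DAYS_PER_SCRIPT * n_scripts
            + EXECUTION_DAYS
            + EXTRA_DAYS_PER_ADDITIONAL_TEST_TYPE * additional_test_types
            + ANALYSIS_DAYS)


days = estimate_business_days(n_scripts=5)
print(days, "business days, about", math.ceil(days / 5), "weeks")
# 35 business days, about 7 weeks
```

Run strictly back to back, five scripts come to 35 business days, or about 7 weeks; reaching the 6-week figure above presumably relies on some phases overlapping, such as starting scripting before planning is fully wrapped up.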

In the final installment of this blog series we will outline a performance testing questionnaire.